What is robots.txt and what does it do for your SEO?

The robots.txt file is a text file that tells search engine spiders (robots) which pages and files they may crawl on your website, which in turn affects what gets indexed. Depending on your website, having a robots.txt file can affect your SEO positively or negatively.

If your site has a robots.txt file, you will find it in your website’s root directory, because that is the only place crawlers look for it. It’s always called robots.txt, so if you can’t find it there, you don’t have one.

robots.txt basic format

The basic format for robots.txt looks like this:

User-agent: [user-agent name]

Disallow: [URL you don’t want to be crawled]

For example, here’s a robots.txt file that will tell all search engines to not crawl the members page:

User-agent: *

Disallow: /members/

The asterisk (*) after User-agent means that this robots.txt file applies to all web crawlers that visit the website (Google, Bing, Yahoo, etc.).
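To see how a crawler would read these two lines, here is a minimal sketch using Python’s standard `urllib.robotparser` module. The `example.com` URLs and the `Googlebot` name are placeholders chosen for illustration; only the two rules come from the example above.

```python
from urllib.robotparser import RobotFileParser

# The example rules from above, written the way they would appear in robots.txt.
RULES = """\
User-agent: *
Disallow: /members/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that is already in memory (no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(RULES)

# Placeholder URLs: anything under /members/ should be off limits,
# everything else stays crawlable.
members_allowed = parser.can_fetch("Googlebot", "https://example.com/members/profile")
blog_allowed = parser.can_fetch("Googlebot", "https://example.com/blog/")

print(members_allowed, blog_allowed)  # prints: False True
```

Because the rule group uses `User-agent: *`, the answer is the same whichever crawler name you pass to `can_fetch`.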

You can check any website’s robots.txt configuration by going to name-of-website.com/robots.txt.
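If you want to do that lookup from a script, the only real work is turning any page URL into the matching robots.txt URL. The helper below is a small sketch; the function name `robots_txt_url` is made up for this example.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL for the site that serves page_url."""
    parts = urlsplit(page_url)
    if not parts.scheme or not parts.netloc:
        raise ValueError(f"expected an absolute URL, got {page_url!r}")
    # robots.txt sits at the root of the host, whatever the page path is,
    # so the original path, query string and fragment are dropped.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://name-of-website.com/blog/some-post?ref=home"))
# prints: https://name-of-website.com/robots.txt
```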

Why is robots.txt important (or is it)?

It depends on your specific use case. Google and other search engines are smart enough to index your pages without a robots.txt file. In other words, not having a robots.txt file does not mean that your webpages won’t get indexed.

That said, a robots.txt file is especially useful if there are specific pages or files that you want to keep search engines away from. By adding a robots.txt file to your site, you can specify rules that exclude certain URLs and pages from crawling. Keep in mind that robots.txt controls crawling rather than indexing itself: a blocked URL can still show up in search results if other pages link to it.

Most major search engines, such as Google, Bing, and Yahoo, will respect your robots.txt configuration.

Practical robots.txt examples

The bigger your website is, the bigger your odds of having some types of pages and files that you don’t want to be crawled and indexed by the search engines. Let’s look at some practical examples.

Block non-public pages

The type of URLs that you probably want to block from getting crawled are admin pages or any pages that require authentication/login to access.

If the URL route to your admin dashboard is `yourwebsite.com/admin/`, you can block it like this in robots.txt:

User-agent: *

Disallow: /admin/

Note: if you’re using WordPress, you should write it like this:

User-agent: *

Disallow: /wp-admin/

Block auto-generated pages

If you’re using a CMS like WordPress, you’re probably getting a bunch of pages auto-generated, such as tag pages. These tag pages will often get indexed by the search engines, which can lead to duplicate content issues and hurt your SEO. So you probably want to disallow (block) those pages in your robots.txt.
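The exact rule depends on where your CMS puts its tag pages. As a rough sketch, assuming they live under `/tag/` (an assumption, so check your own permalink settings), the snippet below assembles a robots.txt that blocks both the admin area and the tag archives. The helper name `build_robots_txt` is hypothetical.

```python
def build_robots_txt(disallowed_paths, user_agent="*"):
    """Build robots.txt text with one Disallow line per path."""
    lines = [f"User-agent: {user_agent}"]
    for path in disallowed_paths:
        # Paths in robots.txt are relative to the site root.
        if not path.startswith("/"):
            raise ValueError(f"path must start with '/': {path!r}")
        lines.append(f"Disallow: {path}")
    # The rules of one group go on consecutive lines.
    return "\n".join(lines) + "\n"


# "/tag/" is an assumed location for auto-generated tag pages.
print(build_robots_txt(["/wp-admin/", "/tag/"]))
```

Generating the file from a list like this keeps all blocked paths in one place, which helps once the list grows beyond a handful of entries.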

Block specific files and directories

Let’s say that you don’t want search engines to index URLs for PDF documents on your website. You can do that in a couple of ways.

If you want to disallow crawling of a directory on your site containing PDFs, you’d configure your robots.txt file like this:

User-agent: *

Disallow: /pdfs/

Or if your PDFs are not all contained in a single directory, you can disallow them based on the .pdf file extension:

User-agent: *

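Disallow: /*.pdf$

The asterisk (*) matches any sequence of characters and the dollar sign ($) marks the end of the URL, so every file on your website with a .pdf extension is blocked from getting crawled. Wildcards are an extension to the original robots.txt format, but major crawlers such as Google and Bing support them.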
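To make the wildcard behavior concrete, here is a small sketch of how such patterns can be matched against URL paths. It assumes the Google-style syntax, where `*` matches any run of characters and a trailing `$` anchors the end of the URL, and it writes the extension rule in its root-anchored form, `/*.pdf$`. Python’s built-in `urllib.robotparser` only does plain prefix matching, so the sketch uses its own matcher. The function names are made up, and `Allow` rules and rule precedence are deliberately left out.

```python
import re


def rule_to_regex(rule_path: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any run of characters and a trailing '$' anchors the end
    of the URL. Everything else is matched literally, as a prefix.
    """
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    # Escape the literal pieces and join them with '.*' where '*' stood.
    body = ".*".join(re.escape(piece) for piece in rule_path.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_blocked(url_path: str, disallow_rules: list[str]) -> bool:
    """True if any Disallow pattern matches the path from its start."""
    return any(rule_to_regex(rule).match(url_path) for rule in disallow_rules)


rules = ["/pdfs/", "/*.pdf$"]
for path in ["/pdfs/report.html", "/downloads/guide.pdf",
             "/downloads/guide.pdf?v=2", "/blog/"]:
    print(path, is_blocked(path, rules))
```

Note the third path: because of the `$` anchor, a PDF URL with a query string no longer ends in `.pdf` and is not matched. Drop the `$` if you want those URLs blocked as well.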

Manual nofollow links

You can manually ask search engines not to follow specific links by using the rel="nofollow" attribute on HTML anchor elements.

Let’s say you have an article, where somewhere you mention “go to your members profile page” and then add a direct link to that non-public page:

<a href="/profile/id/">members profile page</a>

This type of link you usually don’t want search engines to crawl, so you would use nofollow on it like this:

<a href="/profile/id/" rel="nofollow">members profile page</a>

<a href="file-name.pdf" rel="nofollow">Download PDF</a>

Manually dofollow links

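If you added a .pdf wildcard rule to your disallow list in robots.txt as you saw earlier, all PDFs will get blocked. But what if you want PDFs to be blocked from crawling in general, with a few exceptions?

Note that dofollow is not a recognized rel value: a link without rel="nofollow" is followed by default, and no link attribute can override a Disallow rule in robots.txt. To open up a single PDF, add an Allow rule for its path (for example, Allow: /file-name.pdf) next to the Disallow rule. Crawlers that support Allow, such as Google and Bing, apply the most specific matching rule. Then link to the file without nofollow:

<a href="file-name.pdf">Download PDF</a>

A simple rule of thumb for robots.txt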

Any pages or files that are irrelevant for searchers or search engines should go into your robots.txt file’s disallow list.

If you know that a specific directory or file type should never be crawled, use the asterisk (*) to your advantage, and save a lot of manual work.

Google Search Console is your friend

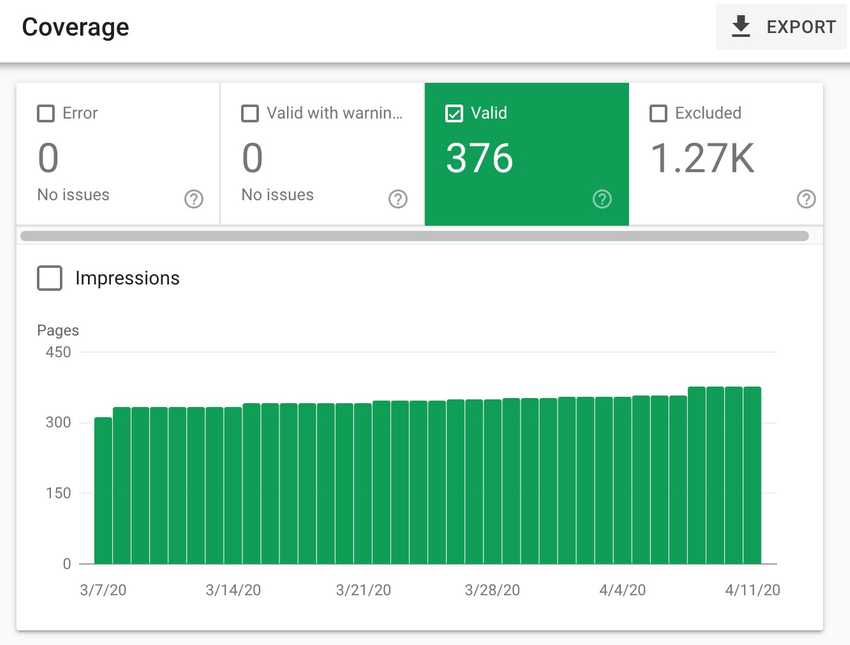

You can check how many pages and files you have indexed by going to the Coverage tab in Google Search Console.

If the number of indexed pages is higher than it should be, and you notice URLs that shouldn’t be indexed, then you should create a robots.txt file for your website.

In summary:

- Robots.txt is not necessary to get your website indexed.

- Robots.txt is practical to filter out/block specific content that you don’t want to be indexed and made searchable on the search engines.

- You can use the nofollow attribute on individual links to ask search engines not to follow them.

- The bigger and more complex your site is, the more useful robots.txt is.